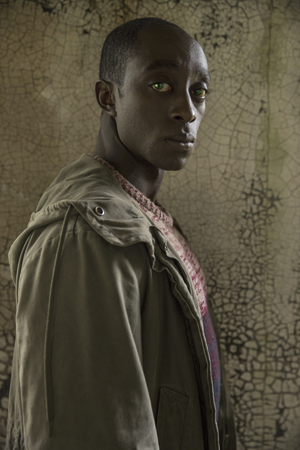

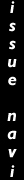

Ivanno Jeremiah as Max from AMC and Channel 4's Humans (Season 2, Episode 1 - Photo Credit: Colin Hutton/Kudos/CH4/AMC). Humans examines a world in which general artificial intelligence, in the form of "synths," begins to become self-aware.

"In a world where artificial intelligence is everywhere ..." This tagline might make audiences shiver, immediately bringing to mind the dangers of Skynet and Terminators. Or perhaps it would make them smile, if they instead thought of the more hopeful vistas of Asimov and Star Trek. Or thoughtful, if challenged by the more ambiguous visions of human-like AI as it is treated in shows like Humans. But, while the tagline has become reality, what we have now is not Mr Data but just "big data"--AI has become a powerful tool in analyzing data and predicting outcomes, and sometimes it seems that all machine learning, even that which begins to mimic human neural networks, will be bent toward that end. So are we free of a future where AI overlords decide that humans are too fallible? Are we completely in control of "AI" as it has been redefined and advertised? Is the idea of a rampaging Terminator--or helpful Mr Data--a children's tale to be forgotten?

So What Is It? What Is AI Nowadays?

"What generation are you?" --JF Sebastian, Blade Runner (1982)

After an "AI Winter" lasting from the 1980s into the early 90s, when disappointment loomed large over many AI projects (especially the promise of any sort of generalized human-like intelligence), a combination of growing computer power and continuing advances in machine learning (e.g., artificial neural nets) began a thaw. Researchers first managed to create systems that could beat human players at games (backgammon, chess). Then, partly through the massive amounts of data that began to be gathered in the early 2000s and partly through refined machine learning methods (such as moving away from "brute force"; for example, no longer having to project every possible chess move out into the future of the current game on every turn), they rapidly moved on to natural language processing, "looking" at images to find similarities and categorize them, and even driving cars. Other than this last bit (which possibly brings to mind the robotic cab drivers in Total Recall), the development has been away from anything resembling the "strong" AI of the past. This might be because trying to create a general human-like intelligence is just way too hard (see our interview with Janelle Shane in this issue). It might also be because the money is in the analytics.

AI Media Relations

While data-crunching AI is everywhere, the media still overwhelmingly focus on general artificial intelligence--most often to try to scare us, as I imagine that creates a bigger box office return. I feel I've been saying this for decades, and it's really almost a cliché, but ... Even now, Fox is about to air Next, which from the commercials is all about an escaped AI that wants to destroy all humans (it could surprise me, I guess ... as of this writing it has yet to air). So from Universal's Frankenstein when cinema was young through the Terminator and Skynet through today, we've been bombarded with negative imagery. (Which seems to be the way of things ... go negative, reap the reward. Fear is easier to reach than thoughtfulness.)

But there have been more nuanced approaches. Some, like Forbidden Planet (1956) or Lost in Space (1965-68), posit humanoid robots that are more computer than AI, but are still treated as just one of the crew. The Alien franchise has both good and bad "synthetics" that by turns either disregard the crew's safety for the good of the corporation or help in the fight against it.

Blade Runner (1982) is the seminal film (for me, anyway) regarding replicants and what the ethics/dangers/etc. are when we create human-like life forms. They are truly more human than the human characters, often more emotive and philosophical, and it can be argued that the real POV character is the replicant Roy Batty, not Deckard. Are they dangerous? Yes. But they are also just trying to survive, and to try to live beyond their artificially mandated four-year lifespan. Sadly, Blade Runner 2049 (2017) is more about its own visuals and less about continuing the exploration of replicants.

Lots of anime explore the same kinds of issues, albeit sometimes leaning into the dangers of general AI (such as Sharon Apple in Macross Plus [1994-95]), but often with far more acceptance of AIs as part of the world around us, such as in Astro Boy (many versions, many dates), or exploring topics such as AI reproduction, as in Armitage III (1995).

Star Trek: The Next Generation (1987-94) also explores AI, albeit for the most part with only one unique test case, Mr Data. Data is presented as trying to become more human through observation and experience, trying to learn to play music with human instincts and always wondering what it would be like to have "real" emotions, which he does not (to begin with, anyway). It is probably the friendliest Western series to AI ... and possibly doesn't explore the true nastiness humans might really feel toward such a creation, stronger and smarter than they.

More recently, TV series like Humans (2015-18) have used the extended format to really explore not only the ethics of creating even not-quite conscious AIs (and also the fully conscious kind), but also human reactions to these technologies. Humans is not without its own issues, but it is one of the best shows I've seen actually exploring what it means to be one of these synths, or to be one of their creators, or to be just a regular human living in a world with such extensive man-machine interfaces. I'd like more shows like this, which deal with very human issues that can teach us about ourselves even if we never develop "more human than human" AIs.

So while we are still laughably inept at creating chat bots that can pass a simplified Turing Test (unless you accept premises such as "the chat bot is a child that doesn't speak your language very well and is very eccentric and is absolutely fascinated by giraffes"), and only Hanson Robotics' Sophia and some Japanese idol software, such as the vocaloid Hatsune Miku, have begun to look or sound like "AI" (and even these still rely heavily on human input), "AI" is booming because it is now marketed as a predictive analytical system that can go through the kinds of massive amounts of data that are collected online and create perfect advertising aimed at potential customers, tell you exactly what kinds of political winds are blowing, and even tell you what's in a photograph or create a photograph of its own. And still beat us at games.

Hatsune Miku, vocaloid/virtual idol, has had many "live" concerts (Crypton Future Media, Inc.)

All of which are massive advances, and I'm not belittling them in any way, except that, oddly, despite a rich and long history of public perception that any sort of advanced AI will kill us all, rampaging like Frankenstein's monster or the Golem of old and destroying the countryside (something we have proven perfectly capable of doing on our own, thank you), marketers are attempting to anthropomorphize this data-driven AI. In commercials for IBM's Watson, for example, the disembodied voice holds a very human conversation while also solving difficult problems for its clients. Ads for Siri and Alexa are similar.

That creates problems in our perception of what these "AI" systems can do, and what we should allow them to do. Should we allow Alexa and Siri to gather data from our living rooms (and bedrooms, and children's bedrooms)? Where does this data go, and what's done with it? (This isn't necessarily any sort of problem with the AI, though--it's what the humans on the other end are doing with it, e.g., Cambridge Analytica.)

Cute little helpers/potential data-gatherers, the Amazon Echo Dot for kids (Amazon.com, Inc.)

Data analysis and AI

"All data that exists is both reality and fantasy" --Batou, Ghost in the Shell (1995; as translated in the Special Edition, 2004)

In Janelle Shane's book You Look Like a Thing and I Love You, she discusses the many ways in which AIs can use data to go subtly or horribly wrong. For example, Nvidia created a GAN (Generative Adversarial Network, a type of neural network) called StyleGAN that they trained to create relatively photorealistic human faces--but when they aimed it at cats, it created multi-limbed several-eyed monsters. Things that a human almost takes for granted--like sorting out where the cats are in an image, and which things are cats, no matter what angle or how big they are, or whether there's a human in the image as well or text overlaying it--our current AI algorithms just cannot do very well. But companies (and people generally) like to talk about these AI algorithms as "seeing" something, or worse, sometimes claiming that the AI is "thinking" or "understanding" something. We have not yet come anywhere near an AI that thinks or understands, except in the most limited possible of ways.

(Although being me I can easily imagine a scifi scenario where I'm writing this and there's an AI out there right now thinking, "This guy just doesn't understand me ...")

What we have done, in a sense, is teach these algorithms very specific things--such as how to pick out a human face when all human faces are in the same position, lighting, etc. Interestingly, we have trouble understanding these AIs--in many ways, the neural networks we have created, limited though they are, are a sort of black box: we know the inputs, we know the outputs, but the steps in between are often only our best guesses--the AI itself builds and destroys connections between its neurons based on whether it gets more or less positive reinforcement (right answers) as we tell it what the outputs should be, and much like a human brain, we don't know which synapses have fired or in what way they all connect.
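To make the "inputs, outputs, and a murky middle" idea a little more concrete, here is a minimal sketch of a single artificial neuron (a perceptron) learning the logical OR function. It is a toy under stated assumptions, not how any production system is built: the function names, the learning rate, and the number of passes are all made up for illustration. The point is only that "learning" here means nudging a few numbers whenever the answer is wrong.

```python
# Toy illustration only: one artificial "neuron" learning logical OR.
# All names and settings here are hypothetical, chosen for readability.

def train_perceptron(samples, epochs=10, learning_rate=0.1):
    """Nudge the weights whenever the neuron gives a wrong answer."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            total = bias + sum(w * x for w, x in zip(weights, inputs))
            output = 1 if total > 0 else 0
            error = target - output  # 0 when right, +1 or -1 when wrong
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


# We tell the neuron what the outputs should be for each input pair.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_perceptron(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(wanted", target, ")")
print("learned numbers:", weights, bias)
```

With two weights and a bias, you can still read off what the neuron is doing. Scale that up to millions of such numbers and the "black box" complaint starts to make sense: the learned values are all there to inspect, but they don't explain themselves.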

And unfortunately, one of the big things we've managed to teach AIs is how to be biased like humans--this is what it has "learned" from the data we have fed it. Ultimately we have trouble understanding how to "fix" our own data so that biases aren't in the inputs; for example, if AI were used to "correct" student grades that might be inflated due to teacher bias (as was almost done in England recently), it could be fed data relating to average student grades in certain geographical areas over the last several years--but these inputs are also indicative of which schools serve poorer areas, and reinforce bias against anyone living in these circumstances, while rewarding wealthy families just for living near better schools. Since we haven't given the AI any "class" or "racial" data, we think (or hope, or wish for marketing purposes) that it can't be biased. Basically, it's able to ferret out institutionalized classism and racism in data we think are absolutely fine, and since we don't know what its internal processes are, we have trouble with more subtle biases in the data that we can't see because we don't know how the AI will decipher it in its quest (usually single-minded) to answer whatever question we've put to it.
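A small sketch may help show how bias can sneak in through a "neutral" input. This is not the method that was proposed in England, and it isn't even a learned model: it is a hand-written rule with entirely invented school names and numbers, assuming only that a grade gets pulled toward the school's historical average. Note that the rule is never told anything about wealth or class.

```python
from statistics import mean

# Entirely made-up data for illustration; school names are hypothetical.
SCHOOL_HISTORY = {
    "Northside": [52, 55, 58],  # past average marks, school in a poorer area
    "Hilltop": [78, 81, 80],    # past average marks, school in a wealthier area
}

# Two students with identical teacher-assessed grades.
STUDENTS = [
    {"name": "A", "school": "Northside", "teacher_grade": 85},
    {"name": "B", "school": "Hilltop", "teacher_grade": 85},
]


def adjust_grade(teacher_grade, school, weight=0.5):
    """Pull the teacher's grade toward the school's historical average.

    `weight` is an assumed knob: 0 trusts the teacher fully,
    1 trusts only the school's past results.
    """
    historical = mean(SCHOOL_HISTORY[school])
    return (1 - weight) * teacher_grade + weight * historical


adjusted = {
    s["name"]: adjust_grade(s["teacher_grade"], s["school"]) for s in STUDENTS
}
for name, grade in adjusted.items():
    print(name, round(grade, 1))
```

Student A ends up with a lower adjusted grade than student B despite identical work, purely because of where they went to school. No "class" column was needed; the school's history stood in for it.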

Will we ever get Strong AI like we were promised/warned?

"Commerce is our goal here ..." --Eldon Tyrell, Blade Runner (1982)

Given our inability to program algorithms that can do more than one very specific task well, it would seem that we are still a long way from "strong" or general AI; in fact, in some ways it feels as though we are just as far away from it now as we were in the 1990s, unless there's a secret project out there somewhere (which I wouldn't bet against). In some ways we're much better at some of the component parts (natural language processing, analyzing inputs, even being able to read human expressions), but these are all surface things--the language processing hasn't led to actual understanding except in very limited, computer-like ways, and the reading of human expressions is in some ways just another image-processing task, and again what the AI does with this information is still very limited and not driven by empathy or understanding.

But really the big companies don't appear to be focused on any sort of strong AI systems, and the big reason for this would be commerce: whatever upside there might be to creating human-like AI is dwarfed by the downside, namely the amount of money spent on such projects in the past with relatively little to show for it, and the lack of any real business reason to want one. Why have more human-like workers when you have plenty of those, and when much more limited machines can replace these workers without any concerns over welfare? Machines don't worry about overtime, rights, desires, sleep, etc. You would expect a strong AI to bring up all of these (well, maybe not sleep; recharging periods, perhaps).

Sophia, by Hanson Robotics, one of the best examples of the combination of humans and robotics that we have today; she works from human-created scripts to get her point across. (from Hanson Robotics, "Humanizing AI" web page)

(In some countries, like Japan, where there are sometimes shortages of available humans for some kinds of work, there have been more advances and more acceptance of human-like robots--but these are human-like in appearance, and only in a limited way in action. Still, people there seem much more comfortable with the idea of strong [or stronger] AI systems, and at this point seem the furthest along in that regard.)

So the most likely origin of strong AI, if it happens at all, may be in a university setting (although this becomes less and less likely the more universities are aligned with commerce), accidentally in some application where one needs some degree of individualized problem-solving (unfortunately, this may be most likely in military applications, and then you can definitely say hello to Skynet), or in, of all places, video games.

Games without Frontiers

"Shall we play a game?" --WOPR, WarGames (1983)

My own development work in AI features giving non-player characters in role-playing games human-like personalities. These are based on real-world personality theories, primarily the Big Five as developed by Costa and McCrae (1995, etc.). Game characters are scored as people would be across a set of thirty facets, and these facet values can change over the course of the NPC's life given the same elasticities as a real-world human.
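As a rough idea of what such a structure might look like in code, here is a minimal sketch. It is not the actual implementation described above: the 0-100 scale, the placeholder facet names (domain plus a number), the single `elasticity` value, and the `experience` method are all assumptions made for illustration. The only thing taken from the text is the shape: five domains, thirty facets, and values that can shift over an NPC's life.

```python
from dataclasses import dataclass, field

# The Big Five domains; six facets each gives the thirty facets mentioned.
DOMAINS = ("openness", "conscientiousness", "extraversion",
           "agreeableness", "neuroticism")
FACETS_PER_DOMAIN = 6


def default_facets():
    """Start every facet at the midpoint of an assumed 0-100 scale."""
    return {f"{domain}_{i}": 50.0
            for domain in DOMAINS
            for i in range(1, FACETS_PER_DOMAIN + 1)}


@dataclass
class NPC:
    name: str
    facets: dict = field(default_factory=default_facets)
    # Hypothetical: the fraction of an event's "push" that actually sticks.
    elasticity: float = 0.1

    def experience(self, facet, push):
        """Nudge one facet by a damped amount, clamped to the 0-100 scale."""
        new_value = self.facets[facet] + self.elasticity * push
        self.facets[facet] = max(0.0, min(100.0, new_value))

    def domain_score(self, domain):
        """Average the six facet values that belong to one domain."""
        values = [value for key, value in self.facets.items()
                  if key.startswith(domain + "_")]
        return sum(values) / len(values)


sara = NPC("Sara Shopkeeper")
sara.experience("agreeableness_1", -40)  # e.g., a customer cheated her
print(sara.facets["agreeableness_1"], sara.domain_score("agreeableness"))
```

A fuller version would presumably give each facet its own elasticity and let it vary with the character's age, but even this small version shows the basic design choice: personality is stored as data that events can slowly reshape, rather than as fixed dialogue flags.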

Part of this work also deals with more transient emotions and feelings, and how NPCs might develop different feelings toward different people (anything from simple dislike to jealousy to love; any emotion is possible), and even perhaps the relation of morality to personality and personal environment.

My own attempts are not the first time game characters have been given emotion ratings or like/dislike ratings (see, for example, the social stats in the Persona series), but they are among the first to give characters potentially realistic and complete personality structures. Usually (and still for most games) this is unnecessary, and because games have many different elements (graphics, story, gameplay, etc. in addition to various types of AI, including pathfinding), only the most necessary are included; if you're not going to meet NPC Sara Shopkeeper at various times over the course of her life and have long chats with her that include something other than the price of armour, the subtleties of her personality development aren't going to matter one whit to gameplay and will take up CPU cycles unnecessarily.

But as a development world for these kinds of AI, games offer the perfect alternative to testing complex "strong AI" systems in the real world--one is neither unleashing potentially insane personality structures into the real world where they can commit murder, nor challenging public perceptions of what an AI should or should not be. It's like being able to develop AI within a scifi story, one that might eventually be written in cooperation with the NPCs themselves, but still contained in a world apart from our own. (There are ethical issues with this--is creating a human-like AI that is trapped in a game world any better than creating it in the real world, in terms of limiting this creature and in some ways making it a puppet?)

This has been done for a very long time, often given the moniker "simulation" rather than "game"; for instance, there are sims that test simple evolutionary structures, giving "creatures" needs like eating, drinking, and reproduction. Examples of this include the very aptly named and classic Creatures and many more you can find through a simple internet search.

But while it might be useful to see if androids really might dream of electric sheep within a contained environment where their nightmares won't have real-world effects, there is still the matter of ethics.

The Ethics of AI

"Now I know what it feels like to be God!" --Henry Frankenstein, Frankenstein (1931; line originally drowned out by thunder due to censors)

Whether it's in a game or not, and whether by accident (Project 2501 in Oshii's Ghost in the Shell) or on purpose (Humans, although it's sort of an on-purpose accident), there are as many ethical problems that need to be addressed as there are technical problems in creating such a being (well, that's a bit hyperbolic, but still ...).

To protect ourselves from AIs that decide humanity is a virus to be got rid of, we could use something like Asimov's famous Three Laws (1. A robot may not injure a human being or, through inaction, allow a human being to come to harm. 2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. 3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law. [from "Runaround" in I, Robot, 1950]). But does this create a slave class? Are we merely using code to replace mental programming to keep people/AIs in their place? And what place is that? Who gets to decide this? You can bet that the mass of humanity will have little choice in the matter; those in power will decide, and advertise/influence/program the rest of us to agree.

Of course, as humans we have trouble figuring out what "ethics" are, and "morals" are equally slippery. An AI created by someone on the Far Right is going to have a very different idea of what it is to be/live/work as a human than one created by someone on the Far Left, and ideas like "justice" will have different meanings as well. We can hope that any such AI we create will be able to decide for itself the meanings of these terms (hoping, I guess, that what is truly "good" wins out ... whatever that means), but our own experiences with educating human beings should be enough to show us the folly of this.

I mentioned that simulations offer us a possibly palatable alternative to creating this AI in the real world, but honestly, doing this only in video games still leaves the question unanswered. So you've created self-aware beings that are trapped inside a video game? Is that somehow ethically better? Are you not still playing God?

And then there's porn.

Humans explores this (in part; it explores a lot else, too); one of the self-aware "synths" goes into hiding in a brothel populated by non-self-aware synths, and the experience has long-term effects on her mental well-being; and another synth has a secret "code" that can be input by the owner to change her from nanny to sex-bot. Given that every technological advance seems to have a porn-y element to it (the Internet, anyone?), it's probably certain that some of the first mass-produced humanoid robots will be used in this way. How will they be programmed? How "realistic" will they become? And will this become one of the ways in which accidental strong AI is achieved? This would be awful, for them and for us, and you would no more want AIs to become aware of their enslavement to human depravity than you would those whose sole purpose is military destruction. (For that matter no one should want AIs that are enslaved to human sexual desire even if they are unaware of it, although the Internet seems to show that humans are perfectly happy to go down this route when they can get away with it, when they can do so anonymously.)

Perhaps that is the most pressing thing: we have to develop ethically ourselves, become "more human than human" before we go create human-like beings of our own, unless we're lucky enough to create creatures who teach us all of this themselves, somehow. But the odds are against us creating a group of Buddhas, and the odds are even more against us doing so and then listening to them. In a way, the longer it takes us to create generalized AI, the better; it gives us a chance to catch up.